Nginx Load Balancing

Nginx can run as a software load balancer using its proxy module (ngx_http_proxy_module) together with the upstream directive, and this is one of the most common and useful ways to use nginx in a web stack. By default, an upstream balances requests with the round-robin method. Round-robin balancing is unaware of server state: factors such as the actual load of a server or its number of active connections are not considered.

By default, if a single request to a server in an upstream block fails, nginx removes that server from the pool for 10 seconds.

Load Balancing Methods

- Round Robin is the default load balancing algorithm for nginx. It chooses servers from the upstream list in sequential order and, after choosing the last server in the list, starts again from the top.

- Least Connections picks the server with the fewest active connections from nginx (taking server weights into account). Many people choose it because it seems the fairest or most balanced option. However, the number of active connections is often not a reliable indicator of server load.

- Sticky sessions is a load balancing method that routes each user to the same server every time. Once a user is routed to a server, they are stuck to it and subsequent requests are served by that server. The open-source version of nginx implements sticky sessions with the ip_hash method, which hashes the user's IP address (the first three octets of an IPv4 address, or the whole IPv6 address). If the user's IP address changes, they may become unstuck and be routed to a different server.
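To make the three selection rules concrete, here is a minimal Python sketch of each. It is a simplified model, not nginx's code: round_robin, least_connections and ip_hash are hypothetical helper names, least_connections ignores server weights, and ip_hash uses zlib.crc32 as a stand-in hash. What it does share with nginx's ip_hash is the hashing key (the first three octets of an IPv4 address), so clients in the same /24 network always land on the same server. Only IPv4 is handled here.

```python
# Simplified models of nginx's selection methods, for illustration only.
import zlib
from itertools import cycle


def round_robin(servers):
    """Return an iterator that yields servers in list order, wrapping after the last."""
    return cycle(servers)


def least_connections(active):
    """Return the server with the fewest active connections.

    `active` maps server name -> current connection count. Weights are
    ignored in this sketch; on a tie, the server listed first wins.
    """
    return min(active, key=active.get)


def ip_hash(client_ip, servers):
    """Pick a server from the first three octets of an IPv4 client address.

    crc32 is a stand-in hash, not the function nginx uses internally.
    """
    key = ".".join(client_ip.split(".")[:3])
    return servers[zlib.crc32(key.encode()) % len(servers)]


servers = ["web1", "web2"]
rr = round_robin(servers)
print([next(rr) for _ in range(5)])                # ['web1', 'web2', 'web1', 'web2', 'web1']
print(least_connections({"web1": 12, "web2": 3}))  # web2
# Two clients in the same /24 network always map to the same server.
print(ip_hash("198.51.100.7", servers) == ip_hash("198.51.100.200", servers))  # True
```

The sketch also shows why round robin is "ignorant": round_robin never looks at connection counts, while least_connections looks at nothing else.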

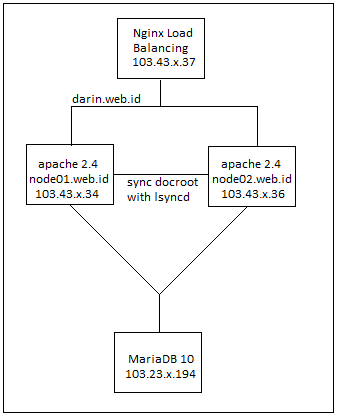

Logical topology

Preparation

- Update and upgrade every server

- Install Apache, PHP and php-mysql on each web server

- Install MariaDB on the database server

- Make sure Apache is running on every web server

- On the database server, create a user and a database, and allow remote connections

- Test the connection between the web servers and the database by creating a PHP MySQL connection script

- Synchronize the DocumentRoot (/var/www/html) between the web nodes with lsyncd
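Before writing the PHP connection test, a quick network-level check can tell you whether the database server accepts remote TCP connections at all. This is a minimal sketch, assuming MariaDB listens on its default port 3306; port_open is a hypothetical helper, and it only proves the port is reachable, not that the user, password or grants are correct.

```python
# Hypothetical helper: checks TCP reachability only, not MariaDB credentials.
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, timed out, or host name not resolvable
        return False
```

Run it on each web node against the database server, for example port_open("<db-server-ip>", 3306). A False result usually points to the bind-address setting in the MariaDB configuration or to a firewall rule; the PHP test is still needed to verify the database user and its privileges.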

Load balancing configuration

Install nginx:

```shell
apt -y install nginx
```

Configure nginx: back up the original configuration directory, then edit nginx.conf.

```shell
cp -r /etc/nginx /root/
vim /etc/nginx/nginx.conf
```

HTTP Load Balancing

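```nginx
user nginx;                # Debian/Ubuntu distro packages run nginx as www-data instead
worker_processes auto;
pid /run/nginx.pid;
include /usr/share/nginx/modules/*.conf;  # RHEL-style path; Debian/Ubuntu use /etc/nginx/modules-enabled/*.conf

events {
    worker_connections 1024;
}

http {
    access_log /var/log/nginx/access.log;
    error_log /var/log/nginx/error.log;
    keepalive_timeout 65;
    default_type application/octet-stream;
    include /etc/nginx/mime.types;
    include /etc/nginx/conf.d/*.conf;     # make sure nothing here also listens on port 80

    upstream backend {
        # you can also use a hostname as the server value, e.g.:
        # server node01.darin.web.id;
        server 103.43.x.34;
        server 103.43.x.36;
    }

    server {
        listen 80;

        location / {
            proxy_pass http://backend;
            # forward the original Host header and client address to the web servers
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        }
    }
}
```

TCP Load Balancing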

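```nginx
user nginx;                # Debian/Ubuntu distro packages run nginx as www-data instead
worker_processes auto;
pid /run/nginx.pid;
include /usr/share/nginx/modules/*.conf;  # loads dynamic modules such as stream (Debian/Ubuntu: /etc/nginx/modules-enabled/*.conf)

events {
    worker_connections 1024;
}

http {
    access_log /var/log/nginx/access.log;
    error_log /var/log/nginx/error.log;
    keepalive_timeout 65;
    default_type application/octet-stream;
    include /etc/nginx/mime.types;
    include /etc/nginx/conf.d/*.conf;
}

# Layer 4 (TCP) proxying lives in the stream context, outside http
stream {
    server {
        listen 80;            # must not clash with an http server listening on port 80
        proxy_pass backend;   # no http:// scheme in the stream context
    }

    upstream backend {
        server 103.43.47.x:80;
        server 103.43.47.x:80;
    }
}
```

Check the configuration syntax, then start nginx (or restart it after changing the configuration):

```shell
nginx -t                  # test the configuration
systemctl start nginx     # first start
systemctl restart nginx   # apply later changes
```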

Additional knowledge of upstream directive

Weighted servers

Weights are useful if you want to send more traffic to a particular server because it has faster hardware, or less traffic to a particular server to test a change on it. Each server receives a share of requests proportional to its weight; the default weight is 1.
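A sketch of how weights turn into traffic shares, assuming the smooth weighted round-robin scheme that nginx's default balancer is generally described as using. The function name is illustrative and the model ignores failures. On each pick, every server adds its weight to a running score; the highest score wins and is reduced by the sum of all weights, which spreads a heavy server's turns across the cycle instead of sending them back to back.

```python
# Assumption: simplified smooth weighted round-robin; not nginx's source code.
def smooth_weighted_rr(weights, n):
    """Return the first n picks for a {server: weight} mapping."""
    total = sum(weights.values())
    current = {server: 0 for server in weights}
    picks = []
    for _ in range(n):
        # Every server adds its weight to its running score...
        for server, weight in weights.items():
            current[server] += weight
        # ...the highest score wins (the first listed server on a tie)...
        best = max(current, key=current.get)
        # ...and pays back the total, which spreads its turns out over the cycle.
        current[best] -= total
        picks.append(best)
    return picks


weights = {"node01": 2, "node02": 2, "node03": 1}
picks = smooth_weighted_rr(weights, 10)
print(picks)
# ['node01', 'node02', 'node03', 'node01', 'node02',
#  'node01', 'node02', 'node03', 'node01', 'node02']
print(picks.count("node03") / len(picks))  # 0.2
```

With weights 2, 2 and 1 the total is 5, so node03 gets 1/5 of the requests, i.e. 20%.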

```nginx
upstream backend {
    server 103.43.x.34 weight=3;  # receives 3 times as many requests as the other server
    server 103.43.x.36;           # default weight=1
}
```

Another example, with three servers:

```nginx
upstream backend {
    server node01.darin.web.id weight=2;
    server node02.darin.web.id weight=2;
    # node03 has the lowest weight: 1 out of a total of 5 (2 + 2 + 1),
    # so it receives only about 20% of the total traffic.
    server node03.darin.web.id weight=1;
}
```

Health checks

In the open-source version of nginx, active health checks are not available (they are an NGINX Plus feature). What you get out of the box are passive checks: a server is removed from the pool after requests to it fail a certain number of times.

By default, if a request to an upstream server errors out or times out once, the server is removed from the pool for 10 seconds (equivalent to max_fails=1 fail_timeout=10s). You can tune this behavior per server with the max_fails and fail_timeout parameters.
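The bookkeeping behind max_fails and fail_timeout can be modelled roughly as below. This is a simplified sketch, not nginx's internal implementation: the Peer class is hypothetical, the failure-counting window is assumed to start at the first failure, and a success is assumed to clear the count. The defaults mirror nginx's (max_fails=1, fail_timeout=10 seconds), and max_fails=0 disables failure accounting.

```python
# Simplified model of passive health checks; assumptions are marked in comments.
from dataclasses import dataclass


@dataclass
class Peer:
    max_fails: int = 1          # nginx default; 0 disables failure accounting
    fail_timeout: float = 10.0  # nginx default, in seconds
    fails: int = 0
    window_start: float = 0.0
    down_until: float = 0.0

    def available(self, now: float) -> bool:
        return now >= self.down_until

    def record_failure(self, now: float) -> None:
        if self.max_fails == 0:
            return
        # Assumption: a new counting window starts if the old one has expired.
        if self.fails == 0 or now - self.window_start > self.fail_timeout:
            self.fails = 0
            self.window_start = now
        self.fails += 1
        if self.fails >= self.max_fails:
            # Skip this server for fail_timeout seconds, then let it try again.
            self.down_until = now + self.fail_timeout
            self.fails = 0

    def record_success(self) -> None:
        self.fails = 0  # assumption: a success clears the failure count


default = Peer()
default.record_failure(now=0)
print(default.available(5), default.available(10))  # False True

strict = Peer(max_fails=2, fail_timeout=5)
strict.record_failure(now=0)
strict.record_failure(now=7)   # the window opened at t=0 has expired, so the count restarts
print(strict.available(7))     # True
strict.record_failure(now=8)   # second failure within 5 s of t=7
print(strict.available(8), strict.available(13))  # False True
```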

max_fails and fail_timeout

```nginx
upstream backend {
    # marked unavailable after 2 failures within 5 seconds, then skipped for 5 seconds
    server 103.43.x.34 max_fails=2 fail_timeout=5s;

    # marked unavailable after 100 failures within 50 seconds, then skipped for 50 seconds
    server 103.43.x.36 max_fails=100 fail_timeout=50s;
}
```

Removing a server from the pool

When a server is marked as down, it is considered permanently unavailable and no traffic is routed to it, so you can take it out of rotation without deleting its server line.

```nginx
upstream backend {
    server node01.darin.web.id down;  # receives no traffic
    server node02.darin.web.id;
    server node03.darin.web.id;
}
```

Backup servers

In the example below, node04.darin.web.id receives traffic only when all of the primary servers in the pool are unavailable. Its usefulness is limited to smaller workloads, because you would need enough backup servers to handle the traffic of the entire pool. Note that the backup parameter cannot be combined with the ip_hash method.
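A sketch of how the down and backup flags narrow the set of servers nginx can choose from. It is a simplified model: eligible is a hypothetical helper, unavailable stands for servers currently failed by the passive checks, and the configured balancing method would then pick among the returned servers.

```python
# Simplified model of server eligibility; not nginx's source code.
def eligible(servers, unavailable=frozenset()):
    """Return the names the balancer may choose from.

    servers: list of (name, flags) pairs, flags being a set like {"down"} or {"backup"}.
    unavailable: names currently failed by the passive health checks.
    """
    usable = [(name, flags) for name, flags in servers
              if "down" not in flags and name not in unavailable]
    primaries = [name for name, flags in usable if "backup" not in flags]
    # Backup servers are only considered when no primary server is usable.
    return primaries or [name for name, flags in usable if "backup" in flags]


pool = [
    ("node01.darin.web.id", set()),
    ("node02.darin.web.id", set()),
    ("node03.darin.web.id", set()),
    ("node04.darin.web.id", {"backup"}),
]
print(eligible(pool))  # the three primary nodes
failed = {"node01.darin.web.id", "node02.darin.web.id", "node03.darin.web.id"}
print(eligible(pool, unavailable=failed))  # ['node04.darin.web.id']
```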

```nginx
upstream backend {
    server node01.darin.web.id;
    server node02.darin.web.id;
    server node03.darin.web.id;
    server node04.darin.web.id backup;  # used only when node01 to node03 are all unavailable
}
```

Turn on the least_conn algorithm

```nginx
upstream backend {
    least_conn;
    server 103.43.x.34;
    server 103.43.x.36;
}
```

Turn on sticky sessions with ip_hash

```nginx
upstream backend {
    ip_hash;
    server 103.43.x.34;
    server 103.43.x.36;
}
```

SSL Termination

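In this setup nginx terminates TLS on port 12345 and forwards the decrypted TCP stream to the backend servers.

```nginx
stream {
    upstream stream_backend {
        server backend1.example.com:12345;
        server backend2.example.com:12345;
        server backend3.example.com:12345;
    }

    server {
        listen 12345 ssl;
        proxy_pass stream_backend;

        ssl_certificate     /etc/ssl/certs/server.crt;
        ssl_certificate_key /etc/ssl/private/server.key;  # keep private keys out of the public certs directory
        # SSLv3, TLSv1 and TLSv1.1 are obsolete and insecure; TLSv1.3 needs OpenSSL 1.1.1 or later
        ssl_protocols       TLSv1.2 TLSv1.3;
        ssl_ciphers         HIGH:!aNULL:!MD5;
        ssl_session_cache   shared:SSL:20m;
        ssl_session_timeout 4h;
        ssl_handshake_timeout 30s;
        #...
    }
}
```

Reference: Nginx practical guide.pdf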